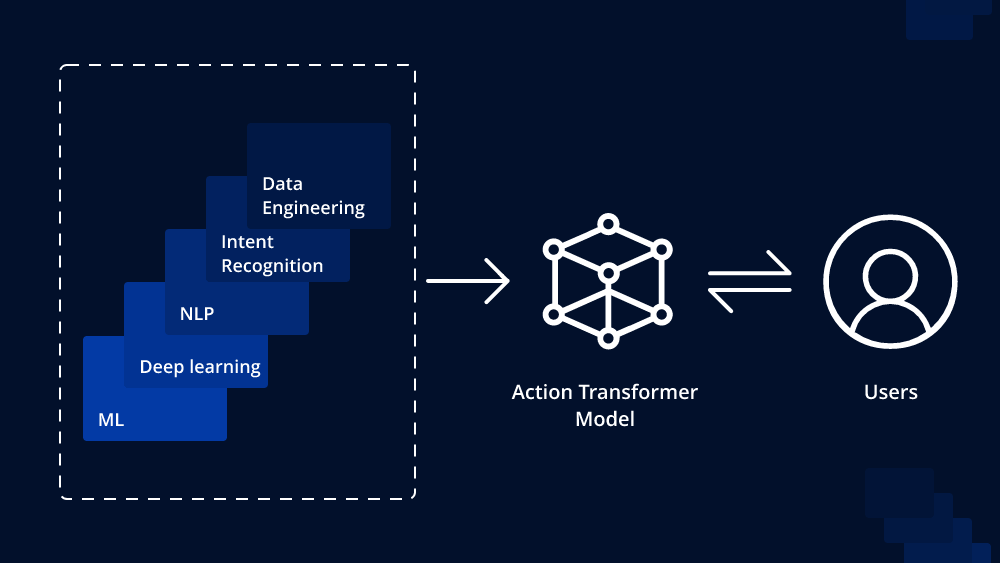

In recent years, Transformer models have revolutionized natural language processing (NLP) and have been applied successfully to tasks such as machine translation, text generation, and sentiment analysis. Much of their success comes from self-attention, which lets them capture long-range dependencies in sequential data. Building on this foundation, researchers have proposed Action Transformer models, which adapt the Transformer to sequential decision-making tasks. In this article, we explain the concept of Action Transformers and outline how to implement one.

Understanding Action Transformer Models:

Action Transformer models extend the standard Transformer architecture to sequential decision-making. In traditional NLP tasks such as machine translation, the input is a fixed sequence that the Transformer processes to generate an output sequence. In real-world applications like robotics, game playing, or autonomous driving, however, the model does not receive the whole sequence in advance. It must choose an action at each step, and the outcome of each action influences subsequent decisions.

Action Transformers address this challenge by introducing the concept of “actions” and “action spaces” into the Transformer model. The model’s objective is to learn a policy that maps the current state to a probability distribution over possible actions. At each step, an action is sampled from this distribution, executed in the environment, and the process continues iteratively. This way, the model effectively learns to make sequential decisions based on the environment’s state and the actions it has taken in the past.
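To make the decision loop concrete, here is a minimal Python sketch of a single rollout. Both `toy_policy` and `transition` are hypothetical: `toy_policy` stands in for a trained Action Transformer (it only favours repeating the previous action), and `transition` is a made-up one-dimensional environment. The point is the shape of the loop: map the history to a distribution, sample an action, execute it, and append the result to the history.

```python
import math
import random

def softmax(logits):
    """Convert raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def toy_policy(history, num_actions):
    """Hypothetical stand-in for the Transformer: maps the (state, action)
    history to a distribution over actions. This toy version simply favours
    repeating the previous action."""
    logits = [0.0] * num_actions
    if history:
        _, last_action = history[-1]
        logits[last_action] += 1.0
    return softmax(logits)

def transition(state, action):
    """Hypothetical environment dynamics: action 1 moves right, action 0 moves left."""
    return state + (1 if action == 1 else -1)

rng = random.Random(0)
state, history = 0, []  # history holds the (state, action) pairs seen so far
for _ in range(5):
    probs = toy_policy(history, num_actions=2)              # policy: history -> distribution
    action = rng.choices(range(2), weights=probs, k=1)[0]   # sample one action
    history.append((state, action))
    state = transition(state, action)                       # execute it in the environment
```

In a real model, `toy_policy` would be replaced by a forward pass of the Transformer over the encoded history.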

Implementing an Action Transformer:

Implementing an Action Transformer involves several key steps. Here, we will outline a high-level guide to help you get started:

1. Define the Environment:

The first step is to define the environment in which the sequential decision-making will take place. The environment represents the context in which the model operates, and it provides observations or feedback to the model at each step. For instance, if you are building a game-playing AI, the environment could be the game board, and the observations could be the current state of the board and the available moves.
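As an illustration, the sketch below defines a hypothetical `LineWorld` environment: the agent starts at cell 0 of a short line and must reach the last cell. The class name, the rewards (+1 at the goal, -0.01 for every other step) and the episode limit are assumptions chosen for the example. It loosely follows the `reset`/`step` pattern popularized by libraries such as Gymnasium, but it is not that library's API.

```python
from dataclasses import dataclass

@dataclass
class StepResult:
    observation: int  # the agent's new position
    reward: float     # feedback for the action just taken
    done: bool        # True when the episode has ended

class LineWorld:
    """Hypothetical toy environment: the agent starts at position 0 on a line of
    `size` cells and must reach the last cell. Actions: 0 = left, 1 = right."""

    def __init__(self, size=5, max_steps=20):
        self.size = size
        self.max_steps = max_steps
        self.position = 0
        self.steps = 0

    def reset(self):
        """Start a new episode and return the first observation."""
        self.position = 0
        self.steps = 0
        return self.position

    def step(self, action):
        """Apply an action and return the new observation, reward and done flag."""
        move = 1 if action == 1 else -1
        # Clamp to the ends of the line so the agent cannot walk off it.
        self.position = min(max(self.position + move, 0), self.size - 1)
        self.steps += 1
        reached_goal = self.position == self.size - 1
        reward = 1.0 if reached_goal else -0.01  # small penalty for each extra step
        done = reached_goal or self.steps >= self.max_steps
        return StepResult(self.position, reward, done)
```

For example, `env = LineWorld()` followed by `env.reset()` and four calls to `env.step(1)` reaches the goal and ends the episode.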

2. Define the Action Space:

Next, you need to define the action space for the environment. The action space comprises all the possible actions the model can take at each step. These actions should be well-defined and relevant to the task at hand. In the game-playing example, the action space would consist of all legal moves that a player can make.
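In board games the set of legal moves changes from state to state, so a common approach is to keep a fixed action space and mask out illegal actions before the softmax. The sketch below assumes a tic-tac-toe board (nine cells, where an action is legal only if its cell is empty); `legal_actions` and `masked_softmax` are illustrative names, not part of any library.

```python
import math

EMPTY = "."

def legal_actions(board):
    """Fixed action space of 9 cells (row-major); a cell is a legal move only if empty."""
    return [i for i, cell in enumerate(board) if cell == EMPTY]

def masked_softmax(logits, legal):
    """Softmax restricted to legal actions; illegal actions get probability 0.
    Assumes at least one action is legal."""
    legal_set = set(legal)
    m = max(logits[i] for i in legal)  # stabilise using legal actions only
    exps = [math.exp(x - m) if i in legal_set else 0.0 for i, x in enumerate(logits)]
    total = sum(exps)
    return [e / total for e in exps]

board = list("XO.X.O...")  # cells 2, 4, 6, 7 and 8 are still empty
legal = legal_actions(board)
probs = masked_softmax([0.0] * 9, legal)  # uniform scores -> uniform over legal moves
```

Masking keeps the model's output layer the same size in every state while guaranteeing that it never samples an illegal move.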

3. Data Collection:

To train the Action Transformer, you will need data that contains sequences of states, actions taken, and rewards received. This data can be collected by running simulations of the environment with random or predefined policies. The collected data serves as the training set for the model.
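Here is a minimal sketch of data collection with a random policy, assuming a hypothetical one-dimensional environment (`lineworld_step`: move left or right, reward 1 on reaching the last cell and -0.01 otherwise). Each episode is stored as a list of (state, action, reward) triples, the format this step calls for. The function names and constants are illustrative.

```python
import random

def lineworld_step(position, action, size=5):
    """Hypothetical toy dynamics: move left (0) or right (1) on a line; reaching
    the last cell ends the episode with reward 1, otherwise the reward is -0.01."""
    position = min(max(position + (1 if action == 1 else -1), 0), size - 1)
    done = position == size - 1
    return position, (1.0 if done else -0.01), done

def collect_episode(policy, rng, max_steps=20):
    """Run one episode and record (state, action, reward) triples."""
    trajectory = []
    state = 0
    for _ in range(max_steps):
        action = policy(state, rng)
        next_state, reward, done = lineworld_step(state, action)
        trajectory.append((state, action, reward))
        state = next_state
        if done:
            break
    return trajectory

def random_policy(state, rng):
    """Uniformly random baseline policy over the two actions."""
    return rng.randrange(2)

rng = random.Random(42)
dataset = [collect_episode(random_policy, rng) for _ in range(100)]
```

Replacing `random_policy` with a scripted or expert policy yields the "predefined policy" variant mentioned above.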

4. Model Architecture:

The core of the Action Transformer follows the traditional Transformer architecture. In an encoder-decoder design, the encoder processes the input states, while the decoder generates the action probabilities; decoder-only variants, which process states and actions as a single causally masked sequence, are also common. In this guide, the layers are trained jointly to maximize expected cumulative reward using reinforcement learning.
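To show how attention turns a sequence of states into action probabilities, here is a deliberately tiny, pure-Python sketch: one causal self-attention head with a residual connection and a linear action head, using random, untrained weights. It is a simplification rather than the encoder-decoder design described above. It omits positional encodings, layer normalization, feed-forward blocks and multiple heads, and `TinyActionTransformer` is a hypothetical name. The causal mask means the action distribution at step t depends only on states up to t, which is what a policy acting online requires.

```python
import math
import random

def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def random_matrix(rows, cols, rng, scale=0.5):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

class TinyActionTransformer:
    """Hypothetical single-layer, single-head causal self-attention over a
    sequence of state vectors, followed by a linear action head."""

    def __init__(self, state_dim, model_dim, num_actions, seed=0):
        rng = random.Random(seed)
        self.w_in = random_matrix(state_dim, model_dim, rng)   # state embedding
        self.w_q = random_matrix(model_dim, model_dim, rng)    # query projection
        self.w_k = random_matrix(model_dim, model_dim, rng)    # key projection
        self.w_v = random_matrix(model_dim, model_dim, rng)    # value projection
        self.w_out = random_matrix(model_dim, num_actions, rng)  # action head
        self.model_dim = model_dim

    def action_probs(self, states):
        """states: list of T state vectors. Returns T action distributions;
        position t attends only to positions 0..t (causal mask)."""
        h = matmul(states, self.w_in)
        q, k, v = matmul(h, self.w_q), matmul(h, self.w_k), matmul(h, self.w_v)
        outputs = []
        for t in range(len(states)):
            # Scaled dot-product scores against the current and earlier positions only.
            scores = [sum(a * b for a, b in zip(q[t], k[j])) / math.sqrt(self.model_dim)
                      for j in range(t + 1)]
            weights = softmax(scores)
            context = [sum(w * v[j][d] for j, w in enumerate(weights))
                       for d in range(self.model_dim)]
            mixed = [c + x for c, x in zip(context, h[t])]  # residual connection
            logits = [sum(m * w for m, w in zip(mixed, col)) for col in zip(*self.w_out)]
            outputs.append(softmax(logits))
        return outputs

model = TinyActionTransformer(state_dim=5, model_dim=8, num_actions=2)
states = [[1.0 if i == pos else 0.0 for i in range(5)] for pos in (0, 1, 2)]  # one-hot
probs = model.action_probs(states)  # one action distribution per time step
```

In practice the same structure would be built with a deep-learning framework, with stacked layers and weights learned during training.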

5. Reinforcement Learning:

Reinforcement Learning (RL) is used to optimize the Action Transformer model. At each step, the model samples an action from the predicted probability distribution and executes it in the environment. The environment returns a reward, indicating how good or bad the action was. The RL algorithm uses these rewards to update the model’s parameters, encouraging it to take better actions in the future.
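The article does not name a specific RL algorithm. One of the simplest choices is the REINFORCE policy-gradient method, summarized below under the assumption of episodic training with discount factor $\gamma$. Here $s_{\le t}$ denotes the history of states (and past actions) the Transformer conditions on, $\alpha$ is a learning rate, and $z$ are the logits feeding the final softmax.

```latex
G_t = \sum_{k=t}^{T-1} \gamma^{\,k-t}\, r_k
\qquad
\nabla_\theta J(\theta) = \mathbb{E}_{\pi_\theta}\!\left[\sum_{t=0}^{T-1} G_t\, \nabla_\theta \log \pi_\theta(a_t \mid s_{\le t})\right]
\theta \leftarrow \theta + \alpha \sum_{t=0}^{T-1} G_t\, \nabla_\theta \log \pi_\theta(a_t \mid s_{\le t})
\qquad
\frac{\partial \log \pi(a \mid s_{\le t})}{\partial z_b} = \mathbb{1}[a = b] - \pi(b \mid s_{\le t})
```

For example, with rewards $(0, 0, 1)$ and $\gamma = 0.5$, the returns are $G = (0.25, 0.5, 1)$, so earlier actions receive a smaller share of the final reward. Actions followed by high returns have their probability increased the most.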

6. Training and Evaluation:

During training, the model repeatedly interacts with the environment: it samples actions, receives rewards, and updates its parameters using the RL algorithm. Training continues until the model converges to a satisfactory policy. Evaluation is then performed separately from training, typically by running the trained policy on fresh episodes (for example, new random seeds or held-out scenarios) and measuring its average cumulative reward.
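Putting the steps together, the sketch below trains a policy with REINFORCE on a hypothetical toy environment and then evaluates it on fresh episodes drawn with a different random seed, reporting the average undiscounted return. To keep it short and dependency-free, a table of per-state logits stands in for the Transformer, and a fixed budget of 500 training episodes replaces a convergence check. The environment, hyperparameters and function names are all assumptions for illustration.

```python
import math
import random

SIZE, MAX_STEPS = 5, 20  # hypothetical toy line-world settings

def env_step(position, action):
    """Toy dynamics: 0 = left, 1 = right; reward 1 at the last cell, else -0.01."""
    position = min(max(position + (1 if action == 1 else -1), 0), SIZE - 1)
    done = position == SIZE - 1
    return position, (1.0 if done else -0.01), done

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def run_episode(logits, rng):
    """Sample actions from the current policy; return (state, action, reward) triples."""
    trajectory, state = [], 0
    for _ in range(MAX_STEPS):
        action = rng.choices([0, 1], weights=softmax(logits[state]), k=1)[0]
        next_state, reward, done = env_step(state, action)
        trajectory.append((state, action, reward))
        state = next_state
        if done:
            break
    return trajectory

def reinforce_update(logits, trajectory, lr=0.1, gamma=0.99):
    """REINFORCE step for a tabular softmax policy (stand-in for the Transformer)."""
    probs = {s: softmax(v) for s, v in logits.items()}  # snapshot before updating
    g, returns = 0.0, []
    for _, _, r in reversed(trajectory):  # discounted return G_t, computed backwards
        g = r + gamma * g
        returns.append(g)
    returns.reverse()
    for (s, a, _), g_t in zip(trajectory, returns):
        for b in range(2):
            # d log pi(a|s) / d logit_b = 1[a == b] - pi(b|s); ascend the gradient
            logits[s][b] += lr * g_t * ((1.0 if b == a else 0.0) - probs[s][b])

def average_return(logits, episodes, seed):
    """Evaluation: mean undiscounted return over fresh episodes (no updates)."""
    rng = random.Random(seed)
    return sum(sum(r for _, _, r in run_episode(logits, rng))
               for _ in range(episodes)) / episodes

logits = {s: [0.0, 0.0] for s in range(SIZE)}
train_rng = random.Random(0)
for _ in range(500):  # fixed training budget instead of a convergence test
    reinforce_update(logits, run_episode(logits, train_rng))
score = average_return(logits, episodes=100, seed=123)  # separate evaluation seed
```

In principle, swapping the logit table for a Transformer that conditions on the full history leaves this loop unchanged; only the policy's forward pass and its gradient computation differ.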

In conclusion, Action Transformer models offer a powerful framework for sequential decision-making tasks in various domains. By extending the Transformer architecture with actions and action spaces, these models can learn policies that choose actions based on the state of their environment and their past decisions. Implementing an Action Transformer involves defining the environment and action space, collecting data, designing the model architecture, and training the model with reinforcement learning. With growing interest in sequential decision-making problems, Action Transformers hold significant promise for real-world AI applications.

To learn more: https://www.leewayhertz.com/action-transformer-model/